Human-Machine Interactions: Bots Are More Successful if They Impersonate Humans

Study examines humans’ willingness to cooperate with bots

An international research team including Iyad Rahwan, Director of the Center for Humans and Machines at the Max Planck Institute for Human Development in Berlin, set out to determine whether cooperation between humans and machines differs when the machine purports to be human. They carried out an experiment in which people interacted with partners that were either humans or bots. In the study, now published in Nature Machine Intelligence, the scientists show that bots are more successful than humans in certain human-machine interactions, but only if they are allowed to hide their non-human identity.

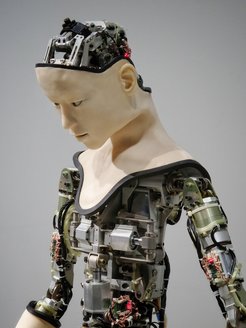

The artificial voices of Siri, Alexa, or Google, and their often awkward responses, leave no doubt that we are talking to a machine rather than a real person. However, recent technological breakthroughs that combine artificial intelligence with deceptively realistic human voices now make it possible for bots to pass themselves off as humans. This raises new ethical questions: Is a bot's impersonation of a human a form of deception? Should transparency be mandatory?

Previous research has shown that humans prefer not to cooperate with intelligent bots. But if people do not even notice that they are interacting with a machine and cooperation between the two is therefore more successful, would it not make sense to maintain the deception in some cases?

In the study published in Nature Machine Intelligence, a research team from the United Arab Emirates, the USA, and Germany, including Iyad Rahwan, asked almost 700 participants to play an online cooperation game with either a human or an artificial partner. In this game, known as the prisoner's dilemma, each player can either act selfishly to exploit the other player or cooperate to the benefit of both.
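To make the dilemma concrete, here is a minimal Python sketch of a single round. The payoff numbers are hypothetical textbook values (temptation 5, reward 3, punishment 1, sucker's payoff 0), not the ones used in the study, and the names `PAYOFFS`, `payoff`, and `best_response` are illustrative. The sketch shows why the game is a dilemma: whatever the partner does, defecting pays more for the individual player, yet two cooperating players earn more together than two defecting ones.

```python
from typing import Dict, Tuple

COOPERATE = "C"
DEFECT = "D"

# Hypothetical textbook payoffs (T > R > P > S); NOT the values used in the study.
# PAYOFFS[(my_move, partner_move)] -> (my_payoff, partner_payoff)
PAYOFFS: Dict[Tuple[str, str], Tuple[int, int]] = {
    (COOPERATE, COOPERATE): (3, 3),  # reward for mutual cooperation (R)
    (COOPERATE, DEFECT): (0, 5),     # sucker's payoff (S) vs. temptation (T)
    (DEFECT, COOPERATE): (5, 0),     # temptation (T) vs. sucker's payoff (S)
    (DEFECT, DEFECT): (1, 1),        # punishment for mutual defection (P)
}


def payoff(my_move: str, partner_move: str) -> int:
    """Return my own payoff for one round."""
    return PAYOFFS[(my_move, partner_move)][0]


def best_response(partner_move: str) -> str:
    """Return the move that maximizes my own payoff, given the partner's move."""
    return max((COOPERATE, DEFECT), key=lambda move: payoff(move, partner_move))


if __name__ == "__main__":
    for partner in (COOPERATE, DEFECT):
        print(f"partner plays {partner}: best response is {best_response(partner)}")
    mutual_c = sum(PAYOFFS[(COOPERATE, COOPERATE)])
    mutual_d = sum(PAYOFFS[(DEFECT, DEFECT)])
    print(f"joint payoff: mutual cooperation {mutual_c}, mutual defection {mutual_d}")
```

Running it prints that defection is the best response to either move, while mutual cooperation yields a joint payoff of 6 versus 2 for mutual defection. This tension between individual incentive and shared benefit is what makes the game a useful test of willingness to cooperate.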

The crucial aspect of the experiment was that the researchers gave some participants false information about their gaming partner's identity: some participants who were interacting with a person were told they were playing with a bot, and vice versa. This allowed the researchers to examine whether humans are prejudiced against gaming partners they believe to be bots, and whether bots are more effective when they conceal their identity than when they disclose it.

The findings showed that bots impersonating humans were more successful at persuading their gaming partners to cooperate. As soon as they revealed their true identity, however, cooperation rates decreased. In a real-world setting, this could mean, for example, that help desks run by bots might provide assistance more quickly and efficiently if they are allowed to pose as humans. The researchers say that society will have to decide which cases of human-machine interaction require transparency and which prioritize efficiency.

Original Publication

Ishowo-Oloko, F., Bonnefon, J.-F., Soroye, Z., Crandall, J., Rahwan, I., & Rahwan, T. (2019). Behavioural evidence for a transparency–efficiency tradeoff in human–machine cooperation. Nature Machine Intelligence. doi:10.1038/s42256-019-0113-5